Neural Network

Let’s say we have some input values. A large, interconnected network passes those values through a series of layers, some computation happens along the way, and we get the desired output. It is a network where everything is connected, organized into layers of neurons.

Inside each layer, the data passes through functions such as summation and activation functions, and these computations produce the network's output. In short, input goes into the network and output comes out.

A network of interconnected nodes arranged in several layers, roughly modeled on the brain, is what we call a neural network.

Advantages of Neural Networks

Neural networks are also known as artificial neural networks (ANNs), and high efficiency is just one of their advantages.

Machine learning was a major breakthrough in the technical world. It led to the automation of monotonous and time-consuming tasks, and it helped in solving complex problems and making smart decisions.

Object detection requires high-dimensional data, such as images and video frames. Traditional machine learning is not well suited to processing such high-dimensional image datasets; it works best on datasets with a restricted number of features. That’s where deep learning comes into the picture.

The strength of deep learning is its ability to consume far more data, handle many layers of abstraction, and detect patterns that a simpler technology would miss.

For example, a linear model might be able to consume around 20 variables, whereas deep learning can handle thousands of inputs.

Neural networks are the central building block of deep learning.

Layers

A layer in a neural network holds a group of neurons whose outputs are passed on to the next layer. Each neuron is a mathematical operation: it multiplies each of its inputs by the weight associated with it, adds up the results, and passes the sum through an activation function before sending it on to the neurons in the next layer.
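To make this concrete, here is a minimal Python sketch of a layer as a group of neurons, assuming a sigmoid activation (the text does not fix one at this point). The functions `neuron` and `layer`, and the weight values, are hypothetical and chosen only for illustration.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias, activation=sigmoid):
    """One neuron: weighted sum of the inputs plus a bias, passed through an activation."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(weighted_sum)

def layer(inputs, weight_rows, biases, activation=sigmoid):
    """A layer is several neurons that all read the same inputs; one output per neuron."""
    return [neuron(inputs, w, b, activation) for w, b in zip(weight_rows, biases)]

# Hypothetical layer of two neurons, each reading the same three inputs.
outputs = layer([1.0, 0.5, -1.0],
                weight_rows=[[0.2, -0.4, 0.1], [0.0, 0.0, 0.0]],
                biases=[0.0, 0.0])
print(outputs)
```

The second neuron has all-zero weights and a zero bias, so its weighted sum is 0 and its output is sigmoid(0) = 0.5; the first neuron's weighted sum works out to -0.1.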

Types of Layers

There are three types of layers:

- Input Layer

- Hidden Layer

- Output Layer

Input Layer

It accepts all the inputs provided by the programmer or the user.

Hidden Layer

Between the input and the output layers is a set of layers known as hidden layers. The computations performed in these layers produce the result that the output layer then delivers.

Output Layer

The output is delivered by the output layer.
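One way to see how the three layer types fit together is to count the parameters that connect them. This sketch assumes a hypothetical network with 3 inputs, one hidden layer of 4 neurons and 2 outputs; `parameter_shapes` is an illustrative helper, not a library function.

```python
# Hypothetical layer sizes: 3 input features, one hidden layer of 4 neurons, 2 outputs.
layer_sizes = [3, 4, 2]

def parameter_shapes(sizes):
    """For each pair of consecutive layers, return ((rows, cols) of weights, number of biases).

    Every neuron in a layer has one weight per neuron in the previous layer,
    plus one bias. The input layer itself has no weights; it only holds the inputs.
    """
    shapes = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        shapes.append(((n_out, n_in), n_out))
    return shapes

shapes = parameter_shapes(layer_sizes)
total = sum(rows * cols + n_bias for (rows, cols), n_bias in shapes)
for i, ((rows, cols), n_bias) in enumerate(shapes, start=1):
    print(f"layer {i}: weights {rows}x{cols}, biases {n_bias}")
print("trainable parameters:", total)
```

For these sizes it reports a 4x3 weight matrix plus 4 biases for the hidden layer and a 2x4 matrix plus 2 biases for the output layer, 26 trainable parameters in total. The input layer contributes none, since it only passes the inputs along.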

Weights and Biases

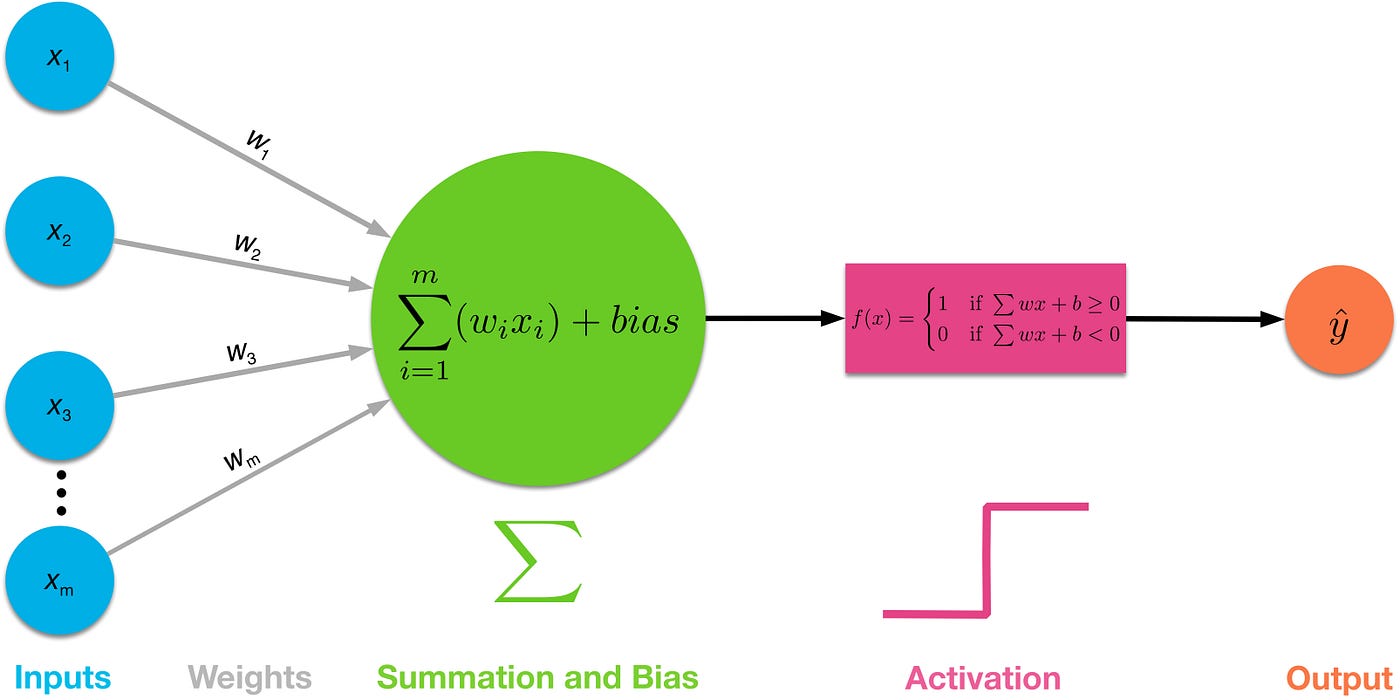

We can understand a weight as a value associated with an input that decides how much importance that input has in calculating the desired output; in other words, it sets the priority of the input.

A bias is simply a constant value that is added to the weighted sum of the inputs to offset the result.
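A small worked example, with made-up numbers chosen only for illustration: two inputs $x_1 = 2$ and $x_2 = 3$, weights $w_1 = 0.9$ and $w_2 = 0.1$, and bias $b = -1$. The weighted sum plus bias, written $z$, is:

```latex
z = w_1 x_1 + w_2 x_2 + b
  = (0.9)(2) + (0.1)(3) + (-1)
  = 1.8 + 0.3 - 1
  = 1.1
```

Although $x_2$ is the larger input, it contributes only 0.3 because of its small weight, while $x_1$ contributes 1.8; the bias then shifts the total down by 1.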

Activation Function

An activation function takes the computed weighted sum and transforms it to produce the neuron's output. Common activation functions include the sigmoid, linear, softmax, and ReLU functions.
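A minimal Python sketch of the four activation functions named above, using only the standard `math` module. Note that softmax, unlike the others, acts on a whole list of values (typically the outputs of a final layer) rather than on a single weighted sum.

```python
import math

def linear(z):
    """Identity: passes the weighted sum through unchanged."""
    return z

def sigmoid(z):
    """Maps any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Keeps positive values and clips negative values to 0."""
    return max(0.0, z)

def softmax(zs):
    """Turns a list of scores into probabilities that sum to 1.

    Subtracting the largest score first avoids overflow in exp()
    and does not change the result.
    """
    m = max(zs)
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

for z in (-2.0, 0.0, 2.0):
    print(z, linear(z), round(sigmoid(z), 4), relu(z))
print(softmax([1.0, 2.0, 3.0]))
```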

Let’s say the input values are $x_1, x_2, \dots, x_n$ and each has an associated weight $w_1, w_2, \dots, w_n$. The weighted sum is $x_1 w_1 + x_2 w_2 + \dots + x_n w_n$, and after adding the bias $b$ it becomes $z = x_1 w_1 + x_2 w_2 + \dots + x_n w_n + b$.

The output is then computed by applying the activation function to this weighted sum. With a sigmoid activation, for example, the output is $\sigma(z) = \frac{1}{1 + e^{-z}}$.
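The weighted sum and sigmoid output described above can be sketched in Python as follows. The helper names and the input, weight and bias values are hypothetical, chosen only for illustration.

```python
import math

def weighted_sum(x, w, b):
    """z = x1*w1 + x2*w2 + ... + xn*wn + b"""
    if len(x) != len(w):
        raise ValueError("x and w must have the same length")
    return sum(xi * wi for xi, wi in zip(x, w)) + b

def sigmoid(z):
    """sigma(z) = 1 / (1 + e^(-z))"""
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical inputs, weights and bias.
x = [0.5, -1.0, 2.0]
w = [0.4, 0.3, -0.1]
b = 0.2

z = weighted_sum(x, w, b)   # 0.2 - 0.3 - 0.2 + 0.2
output = sigmoid(z)
print(f"z = {z:.2f}, output = {output:.4f}")
```

Here $z$ comes out to -0.1, so the sigmoid output is slightly below 0.5.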

Implementation of a Neural Network

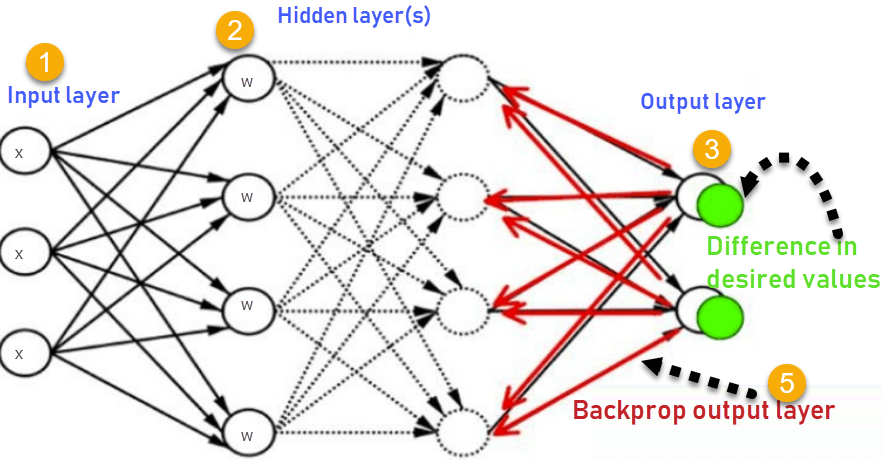

Implementing a neural network involves two steps:

- Feed Forward

- Back Propagation

In a feed-forward neural network, the weights are initially chosen at random and the output is calculated using the activation function. These random weights are then optimized during backpropagation.
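A minimal sketch of random initialization, assuming weights drawn uniformly from [-1, 1] and biases starting at zero; both are illustrative choices, and real libraries use a variety of initialization schemes. `init_params` is a hypothetical helper.

```python
import random

def init_params(layer_sizes, seed=None):
    """Randomly initialize weights (and zero biases) for every layer after the input layer.

    Returns a list of (weights, biases) pairs, where weights[j] holds
    neuron j's weights, one per neuron in the previous layer.
    """
    rng = random.Random(seed)  # a seeded generator makes runs reproducible
    params = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        params.append((weights, biases))
    return params

# Hypothetical network: 3 inputs, 4 hidden neurons, 1 output.
params = init_params([3, 4, 1], seed=42)
for weights, biases in params:
    print(len(weights), "neurons x", len(weights[0]), "weights each")
```

Fixing the seed is only for reproducibility; training would start from whatever random values are drawn and adjust them from there.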

Feed Forward

Feed forward is the entire process of passing the input through all the layers to obtain the output.
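A minimal feed-forward sketch in plain Python, assuming sigmoid activations in every layer and small hand-picked (hypothetical) weights for a network with 2 inputs, 2 hidden neurons and 1 output. Each layer's outputs become the next layer's inputs.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def feed_forward(inputs, params):
    """Pass inputs through each layer in turn; each layer's output feeds the next."""
    activations = inputs
    for weights, biases in params:
        activations = [
            sigmoid(sum(a * w for a, w in zip(activations, row)) + b)
            for row, b in zip(weights, biases)
        ]
    return activations

# Hypothetical fixed weights: (weights per neuron, biases) for each layer.
params = [
    ([[0.5, -0.5], [0.25, 0.25]], [0.0, 0.0]),  # hidden layer: 2 neurons, 2 inputs each
    ([[1.0, -1.0]], [0.0]),                      # output layer: 1 neuron, 2 inputs
]
output = feed_forward([1.0, 1.0], params)
print(output)
```

With input [1.0, 1.0], the first hidden neuron's weighted sum is 0 (output 0.5) and the second's is 0.5; those two values are then combined by the output neuron.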

Back Propagation

Backpropagation is the process of updating the weights to minimize the calculated error. The error is found by comparing the actual output with the predicted output; the difference between the two is the error.
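In a multilayer network, backpropagation applies the chain rule layer by layer to work out how much each weight contributed to the error. The sketch below shows the idea on the smallest possible case, a single sigmoid neuron, assuming half the squared difference as the error measure and a learning rate of 0.5; the helper names and values are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(x, w, b):
    return sigmoid(sum(xi * wi for xi, wi in zip(x, w)) + b)

def squared_error(predicted, actual):
    """Half the squared difference; the 1/2 simplifies the derivative."""
    return 0.5 * (predicted - actual) ** 2

def backprop_step(x, y, w, b, learning_rate=0.5):
    """One gradient-descent update for a single sigmoid neuron.

    Chain rule: dE/dw_i = (a - y) * a * (1 - a) * x_i, where a is the
    prediction and a * (1 - a) is the derivative of the sigmoid.
    """
    a = predict(x, w, b)
    delta = (a - y) * a * (1.0 - a)
    new_w = [wi - learning_rate * delta * xi for wi, xi in zip(w, x)]
    new_b = b - learning_rate * delta
    return new_w, new_b

# Hypothetical single training example and starting parameters.
x, y = [1.0, 2.0], 1.0
w, b = [0.1, -0.2], 0.0

before = squared_error(predict(x, w, b), y)
w, b = backprop_step(x, y, w, b)
after = squared_error(predict(x, w, b), y)
print(f"error before: {before:.4f}, after: {after:.4f}")
```

One update moves each weight against its gradient, so the error on this example goes down.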

The Flow of a Neural Network

We take the inputs, assign the weights and bias associated with them, and apply the activation function to compute the output. We then measure the error in the prediction and minimize this cost function using the gradient descent algorithm, repeating the training phase with the updated weights until we reach the lowest error in prediction.
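Putting these steps together, here is a minimal training loop for a single sigmoid neuron learning the logical OR function with batch gradient descent. The dataset, learning rate, epoch count and half-squared-error cost are assumptions for illustration, not prescribed by the text; a real network would have hidden layers and would backpropagate through each of them.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(data, epochs=10000, learning_rate=0.5, seed=0):
    """Train one sigmoid neuron with gradient descent on half squared error."""
    rng = random.Random(seed)
    n = len(data[0][0])
    w = [rng.uniform(-0.5, 0.5) for _ in range(n)]  # random starting weights
    b = 0.0
    for _ in range(epochs):
        # Accumulate gradients over the whole dataset (batch gradient descent).
        grad_w = [0.0] * n
        grad_b = 0.0
        for x, y in data:
            a = sigmoid(sum(xi * wi for xi, wi in zip(x, w)) + b)  # feed forward
            delta = (a - y) * a * (1.0 - a)                        # error signal
            for i in range(n):
                grad_w[i] += delta * x[i]
            grad_b += delta
        # Step against the gradient to reduce the error, then repeat.
        w = [wi - learning_rate * g for wi, g in zip(w, grad_w)]
        b -= learning_rate * grad_b
    return w, b

# Logical OR: a small, linearly separable toy dataset (hypothetical example).
OR_DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
w, b = train(OR_DATA)
for x, y in OR_DATA:
    a = sigmoid(sum(xi * wi for xi, wi in zip(x, w)) + b)
    print(x, y, round(a, 3))
```

After training, rounding each prediction to 0 or 1 should reproduce the OR targets. A single neuron suffices here only because OR is linearly separable.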